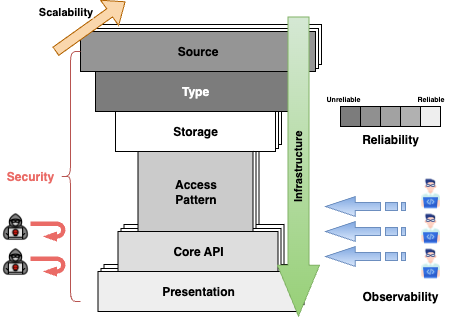

Hourglass Design

The Hourglass Design is something I came up with as a way to think about system design without falling into endless loops of detail. At the top sit the Sources (devices, services, or users), each producing data in different forms and rhythms. That raw input is defined by its Type: the schema, format, and encoding that determine how data is understood and carried forward. From there, the flow narrows through the Access Pattern, where you decide how data can be searched, filtered, or aggregated, and through the Core API that governs how the business interacts with Storage. This middle is the waist of the hourglass: a stable contract that lets you change databases, pipelines, or infrastructure without rewriting the entire system.

At the bottom is the Presentation layer, where data is delivered outward again, whether to people (UI) or to other systems (External APIs). Around the glass, the cross-cutting concerns (Scalability, Security, Reliability, Observability, and Infrastructure/Deployment) determine how well each component can scale, survive failures, and stay operable in practice. The shape is simple but powerful: many inputs narrow through one clear contract before expanding again into many outputs.
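One way to make the waist concrete is to write the Core API against a narrow storage interface, so the backing store can change without touching callers. The sketch below is a minimal illustration, assuming a temperature-reading domain; `ReadingStore`, `InMemoryStore`, `SqliteStore`, and `max_temperature` are hypothetical names, not part of any framework.

```python
import sqlite3
from typing import Optional, Protocol


class ReadingStore(Protocol):
    """The 'waist': the only storage operations the Core API is allowed to use."""

    def put(self, device_id: str, ts: int, temperature: float) -> None: ...

    def range_query(self, device_id: str, start: int, end: int) -> list[float]: ...


class InMemoryStore:
    """Hypothetical backend #1: a dict of (ts, temperature) lists, handy for tests."""

    def __init__(self) -> None:
        self._rows: dict[str, list[tuple[int, float]]] = {}

    def put(self, device_id: str, ts: int, temperature: float) -> None:
        self._rows.setdefault(device_id, []).append((ts, temperature))

    def range_query(self, device_id: str, start: int, end: int) -> list[float]:
        rows = sorted(self._rows.get(device_id, []))  # order by timestamp
        return [t for ts, t in rows if start <= ts < end]


class SqliteStore:
    """Hypothetical backend #2: relational storage via the stdlib sqlite3 module."""

    def __init__(self) -> None:
        self._db = sqlite3.connect(":memory:")
        self._db.execute(
            "CREATE TABLE readings (device_id TEXT, ts INTEGER, temperature REAL)"
        )

    def put(self, device_id: str, ts: int, temperature: float) -> None:
        self._db.execute(
            "INSERT INTO readings VALUES (?, ?, ?)", (device_id, ts, temperature)
        )

    def range_query(self, device_id: str, start: int, end: int) -> list[float]:
        cur = self._db.execute(
            "SELECT temperature FROM readings "
            "WHERE device_id = ? AND ts >= ? AND ts < ? ORDER BY ts",
            (device_id, start, end),
        )
        return [row[0] for row in cur.fetchall()]


def max_temperature(
    store: ReadingStore, device_id: str, start: int, end: int
) -> Optional[float]:
    """Core API operation: depends only on the contract, never on a concrete backend."""
    values = store.range_query(device_id, start, end)
    return max(values) if values else None
```

Swapping `InMemoryStore()` for `SqliteStore()` (or, in a real system, a TimescaleDB- or DynamoDB-backed class) leaves `max_temperature` untouched, which is exactly the freedom the waist is meant to buy.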

| Critical Question | Impact on Design |
|---|---|
| **Source (Data Origin & Ingress)** | |
| Who are the producers (IoT, user, service)? | Protocol & scale: MQTT/Kafka for IoT; REST/gRPC for apps; webhooks for partners. |
| Push or pull? | Push → broker/queue; Pull → scheduler/poller with backoff. |
| How frequent (stream vs batch)? | Stream → streaming pipeline & backpressure; Batch → ETL windows & SLAs. |
| Can sources pre-filter or pre-compute? | Yes → lower ingest cost/noise; No → server-side filtering & enrichment. |
| Are sources uniquely identifiable? | Stable IDs enable partitioning, idempotency, and lineage. |
| **Type (Schema, Format, Encoding)** | |
| Is schema enforced? | Yes → SQL or registry (Avro/Protobuf); No → JSON/NoSQL/object storage. |
| Narrow or wide payload? | Narrow → time-series stores; Wide → columnar OLAP. |
| Compact or human-readable? | Compact (Avro/Protobuf) vs readable (JSON/CSV) affects cost & DX. |
| Nested or flat values? | Nested → JSONB/NoSQL; Flat → relational with indexes. |
| **Storage (Scale, Structure, Retention)** | |
| Daily volume & retention? | High/long → tiered storage (S3 + hot DB) & lifecycle policies. |
| Write pattern: hot or cold? | Hot → append logs/stream DB; Cold → relational with indices. |
| Mutable or immutable? | Mutable → versioning/locks; Immutable → event store/compaction. |
| Joins vs time filters? | Joins → relational; Time-range → TSDB/partitioned tables. |
| Consistency needs? | Strong → RDBMS; Eventual → NoSQL/object storage. |
| **Access Pattern (Read Behavior & Consumers)** | |
| Real-time, periodic, or ad-hoc? | Real-time → cache/precompute; Ad-hoc → OLAP/query planner. |
| Read by ID, time, or search? | ID → key-value; Time → TSDB; Search → inverted index. |
| Global or scoped (user/region)? | Partitioning, row-level access, tenant isolation. |
| Do consumers need aggregates? | Pre-aggregated/materialized views improve latency & cost. |
| **Core API (Interface & Protocols)** | |
| User-triggered or system-triggered? | User → REST/GraphQL; System → webhooks, MQTT, Kafka. |
| Is real-time push needed? | Yes → WebSocket/SSE/MQTT; No → polling with ETags & caching. |
| Precompute or on-demand? | Precompute → Redis/materialized views; On-demand → compute budget. |
| Large/batch downloads? | Async job + signed URL; otherwise paginated endpoints. |
| **Presentation (Frontend/Analytics)** | |
| Need low-latency/live updates? | Push or fast polling; optimistic UI. |
| Large lists/maps? | Pagination, infinite scroll, viewport virtualization. |
| Advanced search/filter? | Search engine (Typesense/Meilisearch/Elasticsearch). |
| Offline or flaky networks? | Service Workers, IndexedDB/LocalStorage, sync queues. |
| Personalized views? | RBAC + query-level filters. |
| **Security (Auth, Privacy, Protection)** | |
| Who can access what? | Public read vs private scopes; IAM/JWT/API keys; WAF. |
| Tenant or user boundaries? | Row-level security, per-tenant schemas/keys, encryption. |
| Is access audited? | Append-only audit logs, forwarding & retention. |
| Abuse protection? | Rate limits, CAPTCHA, throttling/backoff. |
| **Scalability (Throughput & Growth)** | |
| Growth: linear or exponential? | Plan sharding/partitions early; avoid single-writer bottlenecks. |
| CPU, memory, or I/O bound? | Match scaling: workers, queues/backpressure, caches/batching. |
| Horizontal scale possible? | Stateless services, partitioned DBs, idempotent handlers. |
| Natural partition keys? | Device ID, region, tenant → predictable scale-out. |
| **Reliability** | |
| Impact of a component failure? | Retries with jitter, fallbacks, circuit breakers, graceful degradation. |
| Are retries safe? | Idempotency keys, sequence numbers; avoid duplicate side effects. |
| Durability vs availability? | Sync replication/WAL/backups vs looser RPO/RTO. |
| Dependency isolation? | Bulkheads, timeouts, rate limits, queues. |
| **Observability (Monitoring, Logging, Tracing)** | |
| Alert on the right signals? | Golden signals & anomaly detection; dead-man’s switch for silence. |
| Trace across services? | Correlation IDs, OpenTelemetry, X-Ray/Jaeger. |
| Structured, centralized logs? | ELK/Loki/Datadog; consistent fields & sampling. |
| Business KPIs as metrics? | Emit product KPIs alongside infra metrics. |
| **Infrastructure** | |
| Cloud, hybrid, or on-prem? | Managed services vs portable containers/orchestration. |
| Multi-region? | Global DNS, replication strategy, active-active/DR. |
| IaC & CI/CD? | Terraform/CDK + pipelines; policy as code. |
| Release safety? | Canary, feature flags, rollbacks; infra drift detection. |
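The Reliability rows pair retries with idempotency: a retry is only safe when repeating a request cannot duplicate its side effect. Below is a minimal Python sketch of both ideas, assuming an in-process dedupe table. `retry_with_jitter`, `IdempotentHandler`, and their parameters are hypothetical names, and the "full jitter" backoff shown is one common variant among several.

```python
import random
import time
from typing import Callable, Generic, TypeVar

T = TypeVar("T")


def retry_with_jitter(
    op: Callable[[], T],
    attempts: int = 5,
    base: float = 0.1,
    cap: float = 5.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call op(), retrying failures with exponential backoff and full jitter:
    before retry n, sleep a random time in [0, min(cap, base * 2**n)] seconds."""
    if attempts < 1:
        raise ValueError("attempts must be >= 1")
    for attempt in range(attempts - 1):
        try:
            return op()
        except Exception:  # real code should retry only errors known to be transient
            sleep(random.uniform(0, min(cap, base * 2 ** attempt)))
    return op()  # last attempt: any exception propagates to the caller


class IdempotentHandler(Generic[T]):
    """Runs a side effect at most once per idempotency key.
    The dict stands in for a durable store (e.g. a table with a unique key column)."""

    def __init__(self, effect: Callable[[dict], T]) -> None:
        self._effect = effect
        self._results: dict[str, T] = {}

    def handle(self, key: str, payload: dict) -> T:
        if key in self._results:
            return self._results[key]  # duplicate delivery: replay the stored result
        result = self._effect(payload)
        self._results[key] = result
        return result
```

Combined, a client can wrap `handler.handle("order-42", payload)` in `retry_with_jitter` after a timeout without applying the effect twice. The jitter spreads retries out so that many clients failing at once do not retry in lockstep. The dict-based handler is not safe under concurrent access; a unique constraint in a database would provide that guarantee in production.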

System Design Scenarios

These scenarios illustrate how to apply the Hourglass Design Method to real-world systems: IoT monitoring, a social platform, eCommerce, a URL shortener, a search service, and ride-sharing dispatch.

Each table follows the same structure: Block → Design Choice → Justification.

Scenario 1: Realtime Temperature Monitoring (IoT Sensors)

Goal: Build a system for 1M IoT devices reporting temperature every 10s across NSW.

- Realtime heatmap (~10s latency)

- Historical dashboard (daily/weekly/monthly)

- Retention: 6 months
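A quick back-of-envelope check of the numbers behind this scenario follows. The 16-bytes-per-row and 20-bytes-per-device figures are assumptions chosen to match the storage estimates in the table below (for example a 4-byte device key, 4-byte date, and two 4-byte temperatures per daily row); they are not measured values and exclude index and page overhead.

```python
DEVICES = 1_000_000
INTERVAL_S = 10            # one reading per device every 10 s
RETENTION_DAYS = 180       # "6 months"
SECONDS_PER_DAY = 86_400

# Assumed compact binary row sizes (hypothetical; no index/page overhead).
REALTIME_ROW_B = 16        # device_id (4) + timestamp (8) + temperature (4)
DAILY_ROW_B = 16           # device_id (4) + date (4) + min (4) + max (4)
METADATA_ROW_B = 20        # e.g. device_id + location cell

writes_per_sec = DEVICES // INTERVAL_S
readings_per_day = DEVICES * SECONDS_PER_DAY // INTERVAL_S
realtime_bytes = DEVICES * REALTIME_ROW_B             # latest row per device only
daily_bytes = DEVICES * RETENTION_DAYS * DAILY_ROW_B  # one min/max row per device per day
metadata_bytes = DEVICES * METADATA_ROW_B
total_bytes = realtime_bytes + daily_bytes + metadata_bytes

print(f"writes/sec       : {writes_per_sec:,}")
print(f"readings/day     : {readings_per_day:,}")
print(f"realtime table   : {realtime_bytes / 1e6:.0f} MB")
print(f"daily aggregates : {daily_bytes / 1e9:.2f} GB")
print(f"metadata         : {metadata_bytes / 1e6:.0f} MB")
print(f"total (raw)      : {total_bytes / 1e9:.2f} GB")
```

Under these assumptions the raw total is about 2.9 GB, so the ≈ 3.5 GB total in the table presumably leaves some headroom for indexes and storage overhead (actual on-disk size depends heavily on the database). The 8.64 billion readings per day also help explain why the design keeps only the latest reading per device plus daily aggregates rather than every raw reading.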

| Block | Design Choice | Justification |
|---|---|---|
| Source | MQTT protocol; 1M IoT devices; JSON payload: `{ device_id, timestamp, temperature }` | MQTT is lightweight and supports millions of persistent, low-power clients |
| Type | Structured time-series with a fixed schema; JSON at ingest → binary at storage | Efficient parsing and optimized storage |
| Storage | Realtime table (~16 MB); daily aggregation (~2.9 GB / 180 days); metadata (~20 MB); total ≈ 3.5 GB | Tiered storage: hot (real-time) vs cold (aggregates) |
| Preprocessing / Compute | Realtime update per reading; daily min/max aggregation; Redis for fast min/max comparison; batch writes → TimescaleDB | Low-latency ingest + efficient aggregation |
| Core API | REST polling every 10s (map); REST queries (historical) | Polling is simple and cost-efficient at low concurrency |
| Presentation | Web map grid updated every 10s; historical dashboard with calendar filter | Lightweight visualization for end users |
| Security | No login (open data); API throttling (CloudFront + WAF); MQTT certificate-based device auth | Basic protection for an open data model |
| Scalability | ~100K writes/sec; Kafka/Kinesis buffer; DB partitioned by device_id + time; stateless API with autoscaling | Horizontal scalability and decoupling |
| Reliability | MQTT at-least-once delivery; retry pipeline; re-runnable daily jobs | Ensures data completeness under failure |
| Observability | Metrics: ingest rate, write latency, last_seen; logs: ingestion + API | Full visibility into data pipeline health |
| Infrastructure | AWS IoT Core or EMQX → Kinesis/Kafka → ECS/Fargate → TimescaleDB; IaC: Terraform/CDK | Cloud-native, modular, reproducible |
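The Preprocessing / Compute row (real-time update per reading, daily min/max aggregation, fast comparison in Redis, batch writes) can be sketched in plain Python with dicts standing in for Redis. `Reading`, `DailyMinMax`, and the `flush` batch format are hypothetical, and days are bucketed in UTC for simplicity (a NSW deployment would likely bucket by local time). In Redis itself the compare-and-update would need to be atomic, for example via a Lua script; this sketch does not use any Redis API.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass
class Reading:
    device_id: str
    timestamp: int      # Unix seconds
    temperature: float


class DailyMinMax:
    """Keeps the latest reading per device (hot table) and a running daily min/max.
    Plain dicts stand in for Redis; flush() yields rows for a batch write."""

    def __init__(self) -> None:
        self.latest: dict[str, Reading] = {}
        self.daily: dict[tuple[str, str], tuple[float, float]] = {}

    def ingest(self, r: Reading) -> None:
        # Real-time table: keep only the newest reading per device.
        prev = self.latest.get(r.device_id)
        if prev is None or r.timestamp >= prev.timestamp:
            self.latest[r.device_id] = r
        # Daily aggregate: O(1) compare per reading instead of rescanning the day.
        day = datetime.fromtimestamp(r.timestamp, tz=timezone.utc).date().isoformat()
        key = (r.device_id, day)
        lo, hi = self.daily.get(key, (r.temperature, r.temperature))
        self.daily[key] = (min(lo, r.temperature), max(hi, r.temperature))

    def flush(self) -> list[tuple[str, str, float, float]]:
        """Drain aggregates as (device_id, day, min, max) rows for a batch insert."""
        rows = [(d, day, lo, hi) for (d, day), (lo, hi) in sorted(self.daily.items())]
        self.daily.clear()
        return rows
```

Flushing periodically turns 100K individual updates per second into a small number of batched inserts into TimescaleDB, which is the decoupling the table describes.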

Scenario 2: Twitter-like Microblogging Platform

Goal: Design a social platform similar to Twitter.

- Realtime feed updates

- Millions of posts/day

- Support search, hashtags, user timelines

| Block | Design Choice | Justification |
|---|---|---|
| Source | User posts (tweets), likes, follows; ingest via REST API + WebSockets | REST for write operations; WebSocket for live updates |
| Type | JSON payloads (id, user_id, timestamp, text, media_url); hashtags/mentions indexed | Schema is semi-structured but consistent enough for indexing |
| Storage | OLTP DB (Postgres/CockroachDB) for metadata; object store (S3) for media; Elasticsearch for search/index | Separates transactional and search workloads |
| Preprocessing / Compute | Fan-out service builds timelines; Kafka for async event distribution | Decouples writes from personalized feed building |
| Core API | REST (post, follow); WebSocket/GraphQL (feed updates) | REST is reliable for writes; a streaming API delivers low-latency feeds |
| Presentation | Web + mobile apps; infinite-scroll timeline, notifications | UX optimized for engagement |
| Security | OAuth2 login; rate limiting (API Gateway); WAF for spam | Standard identity + abuse protection |
| Scalability | Sharded user/tweet DB; CDN for media; async fan-out to caches | Horizontal scale to millions of users |
| Reliability | Durable Kafka log; retries for writes; timeline cache fallback | Feeds always converge (eventual consistency) |
| Observability | Metrics: post latency, fan-out lag; logs: auth, API, feed delivery | Critical for SLO monitoring |
| Infrastructure | AWS: API Gateway + Lambda/ECS, DynamoDB/Postgres, S3, Elasticsearch | Mix of serverless + managed DB for scale |
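The fan-out service builds timelines when someone posts. Below is a minimal fan-out-on-write sketch, assuming an in-memory follower graph and per-user timeline caches capped at a fixed length. `FanoutService`, `TIMELINE_LEN`, and the method names are hypothetical; in the design above, `publish` would be driven by events consumed from Kafka rather than called inline.

```python
from collections import defaultdict, deque

TIMELINE_LEN = 800  # hypothetical cap on cached timeline entries per user


class FanoutService:
    def __init__(self, timeline_len: int = TIMELINE_LEN) -> None:
        self.followers: dict[str, set[str]] = defaultdict(set)
        # Newest post IDs first; deque(maxlen=...) evicts the oldest entry automatically.
        self.timelines: dict[str, deque] = defaultdict(lambda: deque(maxlen=timeline_len))

    def follow(self, follower: str, followee: str) -> None:
        self.followers[followee].add(follower)

    def publish(self, author: str, post_id: str) -> int:
        """Push post_id into the author's and every follower's timeline cache.
        Returns the number of timelines written (the fan-out cost of this post)."""
        targets = {author} | self.followers[author]
        for user in targets:
            self.timelines[user].appendleft(post_id)
        return len(targets)

    def read_timeline(self, user: str, limit: int = 20) -> list[str]:
        # Reads are a cheap cache lookup: the work was done at write time.
        return list(self.timelines[user])[:limit]
```

The count returned by `publish` makes the trade-off visible: fan-out on write keeps reads cheap, but a post from an account with millions of followers means millions of cache writes, which is why large systems often fall back to fan-out on read for such accounts.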

Scenario 3: eCommerce Platform

Goal: Design a modern eCommerce system.

- Product catalog, cart, checkout

- User accounts, payments

- Scalable search + inventory

| Block | Design Choice | Justification |
|---|---|---|
| Source | Users browsing, adding to cart, checking out; external payment gateway callbacks | Standard REST ingestion with webhook integration |
| Type | Structured JSON for users/products; catalog with categories and variants | Strong schema required for payments + orders |
| Storage | RDBMS (Aurora/MySQL) for orders/payments; DynamoDB for cart sessions; S3 for product media | Transactional consistency for payments; NoSQL for ephemeral carts |
| Preprocessing / Compute | Inventory service decrements stock; async order events via SNS/SQS | Event-driven design keeps the order flow reliable |
| Core API | REST (catalog, cart, order); GraphQL (flexible queries for product search) | REST for critical workflows; GraphQL for frontend flexibility |
| Presentation | Web + mobile storefront; search, cart, and checkout flows | Responsive UX, optimized for conversion |
| Security | OAuth2 login, MFA for admins; PCI-DSS-compliant payment handling; WAF + Shield | Protects sensitive user/payment data |
| Scalability | Autoscaling services behind ALB/NLB; Elasticsearch for catalog search; CDN for static assets | Handles traffic spikes during sales |
| Reliability | Multi-AZ RDS; order queue with DLQ; event replay for payments | Ensures orders are never lost |
| Observability | Metrics: checkout latency, error rate; logs: API + payment gateway | Monitors user impact and failures |
| Infrastructure | AWS: ALB + ECS, Aurora, DynamoDB, S3, Elasticsearch, CloudFront | Mix of managed + serverless services for resilience |

Scenario 4: Short URL Service (URL Shortener)

Goal: Map long URLs to short codes with low latency, high write QPS, and massive read QPS.

- Create short code, redirect instantly

- Unique codes, collision-resistant

- Analytics (clicks, geo, referrer)

| Block | Design Choice | Justification |
|---|---|---|
| Source | REST API: POST /shorten, GET /{code} | Simple CRUD over HTTPS; easy client integration |
| Type | JSON: { long_url, owner_id, ttl } | Minimal schema for fast validation and storage |
| Storage | DynamoDB (PK=code) for mapping; S3 for logs | Single-digit ms reads; elastic scale; cheap analytics storage |
| Preprocessing / Compute | Code gen via base62/ULID; optional custom alias; async analytics (Kinesis) | Collision avoidance; decouple hot path from analytics |
| Core API | REST + 301/302 redirect; rate-limits per owner | Browser-native redirect semantics; abuse protection |
| Presentation | Simple web console + CLI; QR export | Low-friction creation and sharing |
| Security | Auth (API keys/OAuth); domain allowlist; malware scanning | Prevents phishing/abuse; protects brand domains |
| Scalability | CloudFront → Lambda@Edge redirect cache; hot keys sharded | Edge-cached redirects minimize origin load/latency |
| Reliability | Multi-Region table (global tables); DLQ for failed writes | Regional failover; durable retry |
| Observability | Metrics: p50/p99 redirect latency, 4xx/5xx; click streams | Track UX and abuse; support analytics |
| Infrastructure | API Gateway + Lambda, DynamoDB, Kinesis, S3, CloudFront, WAF | Serverless, cost-efficient at any scale |
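For the base62 option, a common approach is to encode a unique integer (a counter value or a random 64-bit ID) into the 62 characters `0-9a-z` and `A-Z`; because the integer is unique, so is the code. A minimal sketch follows; the alphabet order and function names are assumptions, and how the unique integer is allocated (for example, pre-allocated ID ranges per server) is outside its scope.

```python
import string

# Assumed alphabet order: digits, then lowercase, then uppercase (62 characters).
ALPHABET = string.digits + string.ascii_lowercase + string.ascii_uppercase
BASE = len(ALPHABET)
INDEX = {ch: i for i, ch in enumerate(ALPHABET)}


def encode(n: int) -> str:
    """Encode a non-negative integer as a base62 short code."""
    if n < 0:
        raise ValueError("n must be non-negative")
    if n == 0:
        return ALPHABET[0]
    chars = []
    while n:
        n, rem = divmod(n, BASE)
        chars.append(ALPHABET[rem])
    return "".join(reversed(chars))  # most significant digit first


def decode(code: str) -> int:
    """Inverse of encode(); raises KeyError on characters outside the alphabet."""
    n = 0
    for ch in code:
        n = n * BASE + INDEX[ch]
    return n
```

Seven base62 characters cover 62^7 ≈ 3.5 trillion codes, which is why short codes of this length stay collision-free for very large ID spaces as long as the underlying integers are unique.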

Scenario 5: Search Engine (Vertical Site/Search Service)

Goal: Index documents/webpages and provide full-text search with filters, ranking, and autosuggest.

- Ingest & crawl sources

- Index fields + vectors

- Query: keyword + semantic, filters, facets

| Block | Design Choice | Justification |
|---|---|---|
| Source | Crawler / webhooks / batch uploads (S3) | Multiple ingestion modes for coverage and freshness |
| Type | JSON docs: title, body, facets, embedding | Supports keyword and vector (semantic) search |
| Storage | OpenSearch/Elastic (inverted index) + vector index; S3 cold store | Hybrid BM25 + ANN; cheap archive |
| Preprocessing / Compute | ETL: clean, dedupe, tokenize, embed; incremental indexing | Higher relevance; fast refresh with partial updates |
| Core API | Search REST: q, filters, sort; autosuggest endpoint | Standard search UX; low-latency responses |
| Presentation | Web UI: search box, facets, highlighting; pagination | Discoverability and relevance feedback |
| Security | Signed requests; per-tenant filter; index-level RBAC | Isolation and least privilege |
| Scalability | Sharded indexes; warm replicas; query cache/CDN for hot queries | Throughput and low tail latency |
| Reliability | Multi-AZ cluster; snapshot to S3; blue/green index swaps | Safe reindex; fast recovery |
| Observability | Metrics: QPS, p99, recall@k/CTR; slow logs; relevancy dashboards | Quality and performance tuning |
| Infrastructure | ECS/EKS crawlers, Lambda ETL, OpenSearch, S3, API Gateway, CloudFront | Managed search + serverless ETL |
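The keyword half of the hybrid relies on BM25 over an inverted index (BM25 is the default similarity in Elasticsearch and OpenSearch). To show what that index does, here is a minimal in-memory sketch using the classic BM25 formula with a Lucene-style IDF. The whitespace tokenizer, the `k1 = 1.2` / `b = 0.75` defaults, the single `facet` filter, and the `BM25Index` class are simplifying assumptions; real engines add analyzers, stemming, and compressed postings, and the vector (ANN) half is not covered here.

```python
import math
from collections import Counter, defaultdict
from typing import Optional


class BM25Index:
    """Toy inverted index with BM25 scoring; assumes each doc_id is added once."""

    def __init__(self, k1: float = 1.2, b: float = 0.75) -> None:
        self.k1, self.b = k1, b
        self.postings: dict[str, dict[str, int]] = defaultdict(dict)  # term -> {doc_id: tf}
        self.doc_len: dict[str, int] = {}
        self.facets: dict[str, str] = {}

    @staticmethod
    def tokenize(text: str) -> list[str]:
        return text.lower().split()

    def add(self, doc_id: str, text: str, facet: str = "") -> None:
        tokens = self.tokenize(text)
        self.doc_len[doc_id] = len(tokens)
        self.facets[doc_id] = facet
        for term, tf in Counter(tokens).items():
            self.postings[term][doc_id] = tf

    def search(self, query: str, facet: Optional[str] = None, k: int = 10) -> list[tuple[str, float]]:
        n_docs = len(self.doc_len)
        if n_docs == 0:
            return []
        avgdl = sum(self.doc_len.values()) / n_docs
        scores: dict[str, float] = defaultdict(float)
        for term in set(self.tokenize(query)):
            docs = self.postings.get(term, {})
            if not docs:
                continue
            # Lucene-style IDF: always positive, larger for rarer terms.
            idf = math.log(1 + (n_docs - len(docs) + 0.5) / (len(docs) + 0.5))
            for doc_id, tf in docs.items():
                if facet is not None and self.facets[doc_id] != facet:
                    continue  # facet filter: excluded docs are never scored
                # Length normalization: longer-than-average docs are penalized.
                norm = self.k1 * (1 - self.b + self.b * self.doc_len[doc_id] / avgdl)
                scores[doc_id] += idf * tf * (self.k1 + 1) / (tf + norm)
        return sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))[:k]
```

Term frequency saturates through `k1`, so repeating a word helps less and less, while `b` controls how strongly document length is normalized.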

Scenario 6: Ride-Sharing (Dispatch & Matching)

Goal: Match riders ↔ drivers in real time with ETA estimates, pricing, and tracking.

- High write volume (location updates) + low-latency reads (nearby drivers)

- Geo-index + surge pricing

- Trip lifecycle events

| Block | Design Choice | Justification |
|---|---|---|
| Source | Mobile apps (drivers/riders) → gRPC/HTTP + WebSocket | Bi-directional updates; efficient on mobile |
| Type | JSON/Protobuf: lat/lon, speed, status; trip events | Compact on-wire; structured for stream processing |
| Storage | Redis/KeyDB (geo sets) for live locations; Postgres for trips/payments; S3 for telemetry | Fast geo-nearby; durable transactional store |
| Preprocessing / Compute | Stream (Kafka): location smoothing, ETA calc, surge pricing; ML for ETA/dispatch | Low-latency decisions; adaptive pricing |
| Core API | REST: request/cancel trip, quote; WebSocket: live driver ETA/track | Seamless UX for requests + realtime updates |
| Presentation | Mobile map with live driver markers; push notifications | High-frequency updates with low battery impact |
| Security | JWT auth; signed location updates; fraud detection rules | Protects users and platform integrity |
| Scalability | Region-sharded dispatch; partition by city/zone; edge caches for maps/tiles | Reduces cross-region chatter; scales horizontally |
| Reliability | Leader election per region; idempotent trip ops; DLQs for events | Failover and consistent trip lifecycle |
| Observability | Metrics: match time, cancel rate, ETA error; traces for dispatch path | Operational and model quality monitoring |
| Infrastructure | API Gateway + ECS/EKS, Redis Geo, Kafka, Postgres/Aurora, S3, CloudFront, Pinpoint/SNS | Mix of in-memory geo + durable stores |
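The live-location store answers one question constantly: which drivers are near this rider? Redis geo sets do this server-side (for example with `GEOADD` and `GEOSEARCH`). To show the underlying idea without a Redis dependency, here is a minimal sketch that buckets drivers into fixed lat/lon grid cells, scans only nearby cells, and filters candidates by great-circle (haversine) distance. The cell size, `DriverIndex`, and its methods are hypothetical, and the fixed grid is a simplification of the geohash encoding Redis actually uses.

```python
import math
from collections import defaultdict

EARTH_RADIUS_KM = 6371.0
CELL_DEG = 0.01  # hypothetical cell size (about 1.1 km of latitude)


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points, in kilometres."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp, dl = p2 - p1, math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


class DriverIndex:
    """Grid-bucketed driver positions for city-scale radius queries.
    Ignores wrap-around at ±180° longitude."""

    def __init__(self, cell_deg: float = CELL_DEG) -> None:
        self.cell_deg = cell_deg
        self.cells: dict[tuple[int, int], set[str]] = defaultdict(set)
        self.positions: dict[str, tuple[float, float]] = {}

    def _cell(self, lat: float, lon: float) -> tuple[int, int]:
        return (math.floor(lat / self.cell_deg), math.floor(lon / self.cell_deg))

    def update(self, driver_id: str, lat: float, lon: float) -> None:
        """Apply a location update, moving the driver between cells if needed."""
        old = self.positions.get(driver_id)
        if old is not None:
            self.cells[self._cell(*old)].discard(driver_id)
        self.positions[driver_id] = (lat, lon)
        self.cells[self._cell(lat, lon)].add(driver_id)

    def nearby(self, lat: float, lon: float, radius_km: float) -> list[tuple[str, float]]:
        """Drivers within radius_km of (lat, lon), nearest first."""
        # Cells to scan in each direction (+1 slack); longitude cells shrink by cos(latitude).
        km_per_cell_lat = 111.0 * self.cell_deg
        km_per_cell_lon = km_per_cell_lat * max(math.cos(math.radians(lat)), 0.01)
        lat_span = math.ceil(radius_km / km_per_cell_lat) + 1
        lon_span = math.ceil(radius_km / km_per_cell_lon) + 1
        clat, clon = self._cell(lat, lon)
        results = []
        for i in range(clat - lat_span, clat + lat_span + 1):
            for j in range(clon - lon_span, clon + lon_span + 1):
                for d in self.cells.get((i, j), ()):
                    dist = haversine_km(lat, lon, *self.positions[d])
                    if dist <= radius_km:
                        results.append((d, dist))
        return sorted(results, key=lambda x: x[1])
```

A production version would also expire stale positions (drivers who stop sending updates) and track availability, both of which this sketch omits.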