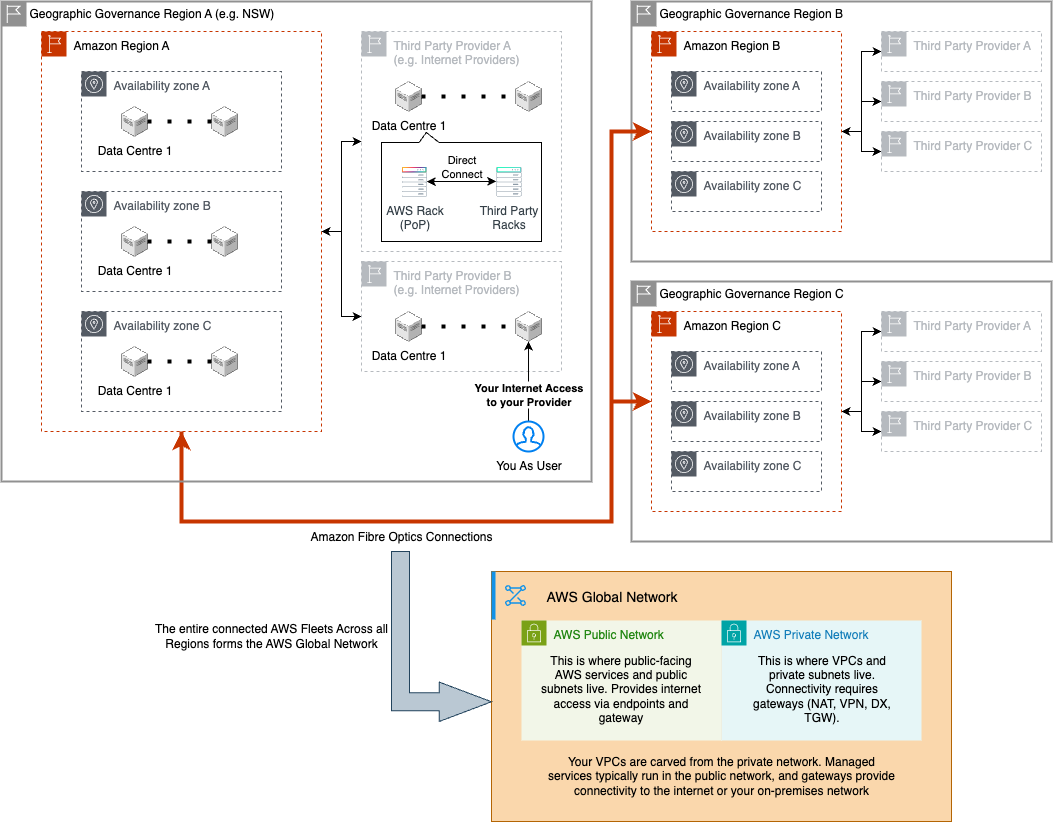

1. AWS Network Overview

AWS operates one of the largest private fiber-optic backbones in the world. This backbone connects Data Centers, groups them into Availability Zones (AZs), and links multiple AZs to form a Region. From there, AWS extends outwards through Points of Presence (PoPs), which connect to the public internet or provide private connectivity via Direct Connect.

- Data Centers, AZs, and Regions

- Data Centers are the physical foundation.

- A group of data centers = an Availability Zone (AZ).

- Several AZs = a Region, interconnected by the AWS backbone for low-latency, fault-tolerant networking.

- Points of Presence (PoPs)

- AWS racks hosted inside third-party colocation sites (e.g., Equinix, Digital Realty).

- Link the AWS backbone to local ISPs and IXPs.

- Run edge services such as CloudFront (CDN caching) and Global Accelerator (traffic optimization).

- Public Internet Connectivity

- PoPs handle AWS traffic in and out of the public internet.

- Customers reach AWS services through public endpoints (e.g., S3, EC2 APIs).

- Direct Connect (DX)

- A dedicated private link that bypasses the internet.

- Provisioned at a PoP, giving you a physical port on AWS gear.

- Delivers lower latency, higher reliability, and consistent bandwidth for hybrid cloud workloads.

2. Service Categories

2.1 Internet Connectivity (Ingress & Egress)

| AWS Service | Purpose | OSI Layer | Upstream | Downstream | Limitations | Pricing |
|---|---|---|---|---|---|---|
| Internet Gateway (IGW) | Enables VPC to access the internet. | L3 – Network | Public Subnet | Internet | • One IGW per VPC • No filtering (not a firewall) | Free |
| NAT Gateway | Allows private subnets to reach the internet. | L3 – Network | Private Subnet | IGW / Internet | • Outbound-only (no inbound) • Not HA by default (deploy per AZ) | Hourly charge + per GB data processing |
| Virtual Private Gateway (VGW) | AWS VPN tunnel endpoint for Site-to-Site VPN. | L3 – Network | On-prem VPN device (CGW) | VPC Route Tables | • One VGW per VPC • Max ~1.25 Gbps per tunnel • Cannot connect multiple VPCs directly | Hourly VPN + data transfer |
| Customer Gateway (CGW) | Customer-managed device establishing VPN tunnels to AWS. | L3 – Network | On-prem Router/Firewall | VGW / TGW | • Managed by customer • HA depends on design | N/A (customer hardware cost) |
| Transit Gateway (TGW) | Central router between VPCs, VPNs, and Direct Connect. | L3 – Network | VPCs / VPN / DX | VPCs / VPN / DX | • One RT per attachment • Propagation optional • Default full mesh • TGW Peering static routes only | Per attachment + per GB data processed |
| Direct Connect (DX) | Dedicated physical link to AWS, bypassing Internet. | L1 – Physical | On-prem Router/Switch | VPC via TGW / VGW | • Provisioning time weeks • No encryption by default • HA requires multiple DX | Per port-hour + data transfer (lower than internet egress) |

| AWS Service | Purpose | OSI Layer | Upstream | Downstream | Limitations | Pricing |
|---|---|---|---|---|---|---|
| VPC Peering | Connects two VPCs privately. | L3 – Network | VPC A | VPC B | • No transitive routing • Cannot use overlapping CIDRs | Data transfer per GB (intra-Region cheaper, inter-Region higher) |
| Gateway Endpoints | Route table entry to access S3/DynamoDB via AWS backbone. | L3 – Network | VPC Subnet | S3 / DynamoDB | • Only supports S3 and DynamoDB • One per route table • Supports VPC endpoint policies | Free |
| Interface Endpoints (PrivateLink) | ENI-based private access to other AWS services or partner services. | L3 / L4 | VPC Subnet / ENI | AWS Service ENI | • S3 and DynamoDB are also reachable through free Gateway Endpoints, which are usually preferred inside a VPC • One per AZ for HA • Private DNS overrides service DNS • Supports VPC endpoint policies | Hourly ENI cost + per GB data processed |
| Route 53 Resolver (.2) | Built-in VPC DNS resolver (`.2` address in every subnet) for public zones and associated private zones. | L7 – Application | EC2 / Lambda / ENI | Internal DNS targets (via `.2`) | • VPC-only (not accessible from on-prem) • No customization • Hybrid DNS requires endpoints | Free (included with VPC) |
| Route 53 Resolver Endpoints | Extend DNS resolution across hybrid networks: • Inbound – On-prem → VPC resolver • Outbound – VPC → on-prem DNS | L7 – Application | On-prem DNS or VPC resources | Route 53 Resolver / On-prem DNS | • Requires ENIs in subnets • One per AZ for HA • Adds query latency vs. `.2` • Query-based limits | Hourly ENI cost + query-based pricing |

2.2 Load Balancing and Traffic Distribution

| AWS Service | Purpose | OSI Layer | Upstream | Downstream | Limitations | Pricing |
|---|---|---|---|---|---|---|
| ALB (Application Load Balancer) | Routes HTTP/HTTPS traffic. | L7 – Application | Internet / CloudFront | EC2 / Lambda / IPs | • HTTP/HTTPS only • No static IPs (unless behind GA) | Hourly + per LCU + data processed |
| NLB (Network Load Balancer) | Balances TCP/UDP traffic. | L4 – Transport | Internet / Internal VPC | EC2 / IPs | • No advanced routing (L7) • Health checks limited | Hourly + per LCU + data processed |
| Gateway Load Balancer (GWLB) | Sends traffic to firewalls/appliances. | L3 / L4 | IGW / NLB | Security Appliance | • Appliances must support GENEVE • Adds latency | Hourly + per LCU + data processed |
| Global Accelerator | Routes global traffic via Anycast IPs. | L4 – Transport | End User | NLB / ALB / IPs | • Not a CDN • No caching | Per accelerator-hour + data transfer |

2.3 Security and Access Control

| AWS Service | Purpose | OSI Layer | Upstream | Downstream | Limitations | Pricing |
|---|---|---|---|---|---|---|
| WAF | Filters HTTP/HTTPS requests. | L7 – Application | CloudFront / ALB | ALB / API Gateway | • L7 only • Rule capacity (WCU) limits apply | Per web ACL + per rule + per request |
| AWS Shield / Advanced | DDoS protection for infra/apps. | L3–L7 | Internet / Edge | VPC Entry Points | • Shield Standard is automatic, Advanced is a paid tier | Shield Std free, Advanced fixed monthly fee |
| ACM | Manages SSL/TLS certificates. | L6 – Presentation | N/A (integrated) | CloudFront / ALB / API GW | • Only ACM-issued certs auto-renew | Free for ACM-managed certs |
| Security Groups / NACLs | Allow/deny traffic at instance/subnet. | L3 / L4 | Client / Peer Service | EC2 / ENI / Subnet | • SG stateful, NACL stateless • NACL rules limit | Free |

2.4 Edge Services and DNS

| AWS Service | Purpose | OSI Layer | Upstream | Downstream | Limitations | Pricing |
|---|---|---|---|---|---|---|
| CloudFront | Distributes and caches content globally at PoPs. | L7 – Application | End Users | ALB / S3 / API GW | • Cache invalidation costs • Regional edge cache not everywhere | Per request + data transfer out |
| Route 53 | DNS resolution with routing policies. | L7 – Application | End Users | IP / ALB / CloudFront | • Query costs • Geo/latency policies add cost | Per hosted zone + per query |

2.5 API and Microservice Communication

| AWS Service | Purpose | OSI Layer | Upstream | Downstream | Limitations | Pricing |
|---|---|---|---|---|---|---|
| API Gateway | Expose/manage REST/HTTP/WebSocket APIs. | L7 – Application | Client / CloudFront | Lambda / Service Backend | • Payload size limits • Latency higher than ALB | Per million requests + data processed |
| App Mesh | Controls service-to-service traffic in a mesh. | L7 – Application | Microservice A | Microservice B | • Envoy sidecar overhead • Complexity | No additional charge (pay for the compute that runs the Envoy proxies) |

2.6 Core Networking Components

| Component | Purpose | OSI Layer | Upstream | Downstream | Limitations | Pricing |
|---|---|---|---|---|---|---|
| VPC | Isolated virtual network with subnets and routing. | L3 – Network | Internet / VPN | Subnets | • Default quota of 200 subnets per VPC (adjustable) • CIDR block limits | Free |
| Elastic Network Interface (ENI) | Virtual NIC attached to resources. | L2 – Data Link | Subnet / VPC | EC2 / Lambda | • Limited ENIs per instance type | Free (included in instance cost) |

3. VPN Connectivity

Hybrid AWS environments typically stretch private address space across on-premises, branch, and cloud networks. AWS supplies both network-level Site-to-Site VPN options and user-level Client VPN so you can choose between full network extensions or individual remote access.

3.1 Site-to-Site VPN with Transit Gateway

This reference design places an AWS Transit Gateway (TGW) at the center of multiple VPCs and an on-premises environment. By tailoring TGW route tables and VPC associations you can allow or deny east-west traffic while still giving every spoke access to the corporate network.

3.1.1 Deployment Workflow

- Step 1: Create TGW and Attachments

- Create TGW (default RT = Route Table A).

- Attach: VPC1, VPC2, and On-premises (via VPN or Direct Connect).

- Notes on VPN connectivity:

- Uses the AWS Global Network, but tunnels still traverse the public Internet.

- Each VPN connection provides two IPsec tunnels, each terminating on a separate AWS public endpoint, for redundancy.

- Performance, latency, and consistency vary due to Internet hops.

- Step 2: Configure TGW Route Table & Associations

- By default, all attachments are associated with the TGW’s default route table (Route Table A).

- You can create custom TGW route tables and associate attachments to them for segmentation (e.g., Dev vs Prod).

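- For this setup, keep the On-premises attachment associated with Route Table A, which holds the VPC1 and VPC2 routes. To block VPC1 ↔ VPC2 traffic, associate the two VPC attachments with a custom route table that holds only the On-premises routes; a single shared table with routes to both VPCs would also forward traffic between them.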

- Step 3: Configure VPC Route Tables

- VPC1 RT: Add `0.0.0.0/0` → TGW Attachment (for On-prem). No route to VPC2.

- VPC2 RT: Add `0.0.0.0/0` → TGW Attachment (for On-prem). No route to VPC1.

- Step 4: On-premises Integration (Propagation)

- Enable propagation from On-prem → TGW RT so both VPCs can learn routes automatically.

- Ensure On-prem routes are advertised back to TGW.

- Options to connect:

- VGW → CGW (1:1 VPC)

- TGW → CGW (1:M VPCs)

- Accelerated Site-to-Site VPN (TGW only)

- Direct Connect (DX):

- Private VIF → For VPC connectivity via a VGW or a Direct Connect Gateway.

- Public VIF → For AWS public services (not VPC routing).

- Transit VIF → For scaling via TGW to multiple VPCs (enterprise-scale option).

- Step 5: BGP Exchange Sequence (Dynamic VPN)

- On-Prem Router Configures BGP ASN → prepares to exchange routes.

- Advertises Internal Routes → on-prem router sends subnets (e.g., `192.168.1.0/24`).

- AWS VPN Gateway Setup → VPN tunnels established.

- BGP Peering → session formed between on-prem router and AWS VPN Gateway.

- Route Advertisement → AWS advertises VPC subnets to the on-prem router.

- Route Learning → on-prem router learns AWS subnets via BGP.

- Traffic to AWS → routed to TGW using BGP-learned paths.

- TGW Forwarding → TGW routes traffic to the correct VPC.

- Return Traffic from AWS → VPC → TGW → VPN tunnels → back to on-prem router.

- Resulting Traffic Flows

- VPC1 → TGW → On-premises ✅

- VPC2 → TGW → On-premises ✅

- VPC1 ↔ VPC2 ❌ (blocked by TGW RT config)
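One way to reason about the resulting flows is to model each TGW route table as a map from prefix to target attachment. The sketch below is illustrative only: the CIDRs, attachment names, and the `tgw_next_hop` helper are hypothetical, and it assumes the VPC attachments are associated with a route table that holds only the on-premises prefix, while the VPN attachment uses a table that holds the VPC prefixes.

```python
import ipaddress

# Hypothetical address plan; these CIDRs are assumptions for illustration.
VPC1 = ipaddress.ip_network("10.1.0.0/16")
VPC2 = ipaddress.ip_network("10.2.0.0/16")
ON_PREM = ipaddress.ip_network("192.168.1.0/24")

# TGW route tables: destination prefix -> target attachment.
SPOKE_RT = {ON_PREM: "vpn"}              # associated with the VPC attachments
HUB_RT = {VPC1: "vpc1", VPC2: "vpc2"}    # associated with the VPN attachment

# Each attachment is associated with exactly one TGW route table.
ASSOCIATIONS = {"vpc1": SPOKE_RT, "vpc2": SPOKE_RT, "vpn": HUB_RT}


def tgw_next_hop(source_attachment, destination_ip):
    """Return the target attachment, or None when no route matches (drop)."""
    table = ASSOCIATIONS[source_attachment]
    dest = ipaddress.ip_address(destination_ip)
    matches = [net for net in table if dest in net]
    if not matches:
        return None
    best = max(matches, key=lambda net: net.prefixlen)  # longest prefix wins
    return table[best]
```

With these tables, `tgw_next_hop("vpc1", "192.168.1.10")` resolves to the VPN attachment, while `tgw_next_hop("vpc1", "10.2.0.5")` returns `None`, which corresponds to the blocked VPC1 ↔ VPC2 flow.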

3.1.2 Operational Considerations

- Speed: Maximum throughput is 1.25 Gbps per VPN tunnel.

- Latency: Varies and can be inconsistent, as traffic traverses the public Internet.

- Cost: Hourly charges per VPN connection, plus standard AWS data transfer fees (outbound GB).

- Provisioning Speed: Quick to set up, as VPNs are software-based and do not require physical circuits.

- High Availability:

- Each VPN connection can support two tunnels for redundancy.

- HA depends on having multiple on-premises customer gateways (e.g., in different locations).

- Integration with Direct Connect:

- VPNs can serve as a backup for DX (failover).

- VPN and DX can be used together for hybrid redundancy.

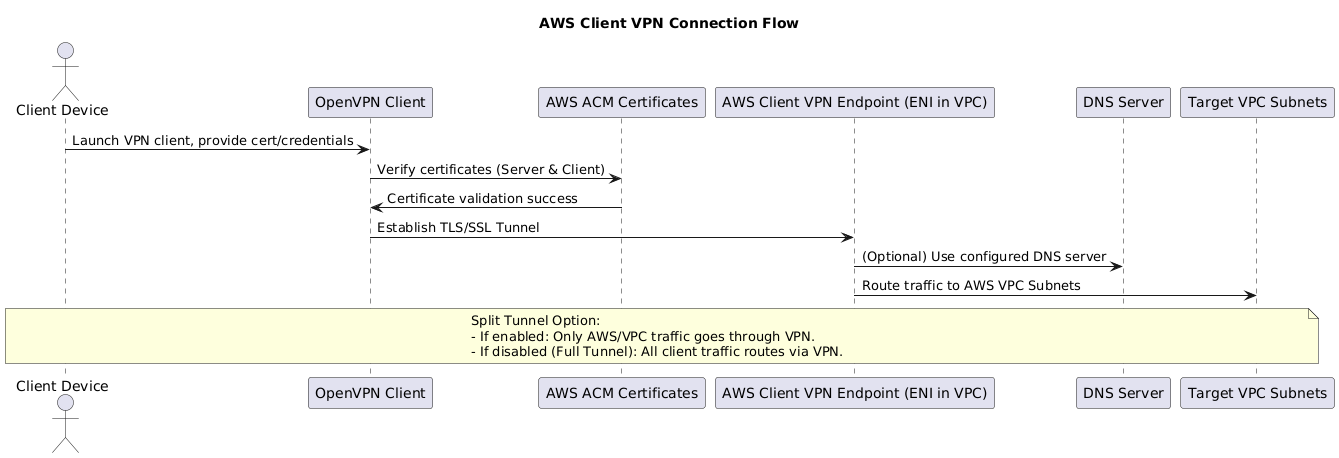

3.2 Client VPN

AWS Client VPN is a fully managed OpenVPN-based service that allows individual users (laptops, developers, admins, remote workers) to securely connect to AWS resources and on-premises networks.

- Purpose:

- Client VPN = User ↔ Network

- Unlike Site-to-Site VPN (which connects entire networks), Client VPN provides user-level access into AWS.

- Architecture:

- Users connect to a Client VPN Endpoint deployed in a VPC.

- The endpoint is associated with one or more subnets (ENIs) across AZs for high availability.

- Billed based on the number of network associations and connected clients.

- Use Cases:

- Remote workforce access to AWS resources in a VPC.

- Secure developer/admin access without requiring a full corporate VPN.

- Extending access into on-premises networks if Client VPN is associated with a TGW or VGW.

- VPN Types:

- Full Tunnel: All traffic (AWS + Internet) routes through the VPN.

- Split Tunnel (not default): Only AWS/VPC traffic routes through the VPN; Internet-bound traffic goes out locally.

- Authentication:

- Certificate-based (via ACM).

- Identity-based (Active Directory, SAML, or federated IdPs).

- Setup Workflow:

- Create Certificates in ACM

- Server certificate for the VPN endpoint.

- Client certificates for users.

- Certificates are used to establish trust between client and server.

- Create Client VPN Endpoint in AWS.

- Associate Subnets (ENIs) across AZs for HA.

- Configure Authorization Rules (which clients can access which networks).

- Add a DNS Server IP (so clients can resolve hostnames inside the VPC).

- Download the OpenVPN configuration file and distribute it to clients.

- Clients connect using an OpenVPN-compatible client.

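The difference between the full-tunnel and split-tunnel modes listed under VPN Types can be sketched as a per-destination decision on the client. This is a simplified model, not the OpenVPN client's actual logic; `route_via_vpn` and the `10.0.0.0/16` VPC CIDR are hypothetical.

```python
import ipaddress

VPN_ROUTES = ["10.0.0.0/16"]  # hypothetical VPC CIDR pushed to the client


def route_via_vpn(destination_ip, vpn_routes, split_tunnel):
    """Decide whether the client sends this destination through the VPN."""
    if not split_tunnel:
        return True  # full tunnel: all traffic goes through the VPN
    dest = ipaddress.ip_address(destination_ip)
    # Split tunnel: only destinations covered by a pushed route use the VPN.
    return any(dest in ipaddress.ip_network(cidr) for cidr in vpn_routes)
```

In split-tunnel mode an address inside the VPC range uses the VPN and any other address leaves through the local Internet connection; in full-tunnel mode every destination uses the VPN.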

3.2.1 Connection Sequence

4. Route Tables

- VPC Route Tables: Decide routing inside each VPC.

- TGW Route Tables: Decide routing between VPCs, VPNs, and On-prem.

- Association: Each attachment (VPC, VPN, DX) can only be linked to one TGW RT.

- Propagation: Routes can automatically flow into TGW RTs (e.g., from VPN or On-prem).

- Routing Priority:

- Inside VPC → VPC RT applies.

- Across attachments → TGW RT applies.

- Longest prefix match always wins.

- Static routes take precedence over propagated routes.

- If multiple propagated routes exist, AWS evaluates in this order:

- Direct Connect (DX)

- VPN Static

- VPN BGP

- AS_PATH length (shortest wins)

- Subnet Association: Each subnet can only be associated with one RT (main or custom).

- CIDR Overlap:

- Use separate RTs per subnet to send traffic to different VPCs with the same CIDR.

- Or rely on more specific prefixes, since routing favors specificity.

- Ingress Routing (Gateway Route Tables):

- Enables inspection/control of inbound traffic flows.

- Previously, RTs only controlled outbound traffic; ingress routing extends this capability for security appliances.
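The routing priority rules above can be sketched as a sort key: longest prefix first, then route origin, then AS_PATH length. This is a simplified model under the ordering listed in this section, not AWS's implementation; the `Route` type, the origin labels, and the sample routes are hypothetical.

```python
import ipaddress
from dataclasses import dataclass

# Lower rank is preferred; the order follows the list in this section.
ORIGIN_RANK = {"static": 0, "dx": 1, "vpn-static": 2, "vpn-bgp": 3}


@dataclass(frozen=True)
class Route:
    prefix: str
    target: str
    origin: str
    as_path_len: int = 0


def select_route(routes, destination_ip):
    """Pick the winning route for a destination, or None if nothing matches."""
    dest = ipaddress.ip_address(destination_ip)
    candidates = [r for r in routes if dest in ipaddress.ip_network(r.prefix)]
    if not candidates:
        return None
    return min(
        candidates,
        key=lambda r: (
            -ipaddress.ip_network(r.prefix).prefixlen,  # longest prefix first
            ORIGIN_RANK[r.origin],                      # static, DX, VPN static, VPN BGP
            r.as_path_len,                              # shortest AS_PATH last
        ),
    )


ROUTES = [
    Route("10.0.0.0/8", "dx-attachment", "dx"),
    Route("10.20.0.0/16", "vpn-a", "vpn-bgp", as_path_len=3),
    Route("10.20.0.0/16", "vpn-b", "vpn-bgp", as_path_len=1),
    Route("0.0.0.0/0", "igw", "static"),
]
```

For `10.20.1.1` the more specific /16 beats the DX /8, and the shorter AS_PATH picks `vpn-b`; for `10.99.0.1` only the DX route and the default route match, so the /8 wins.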

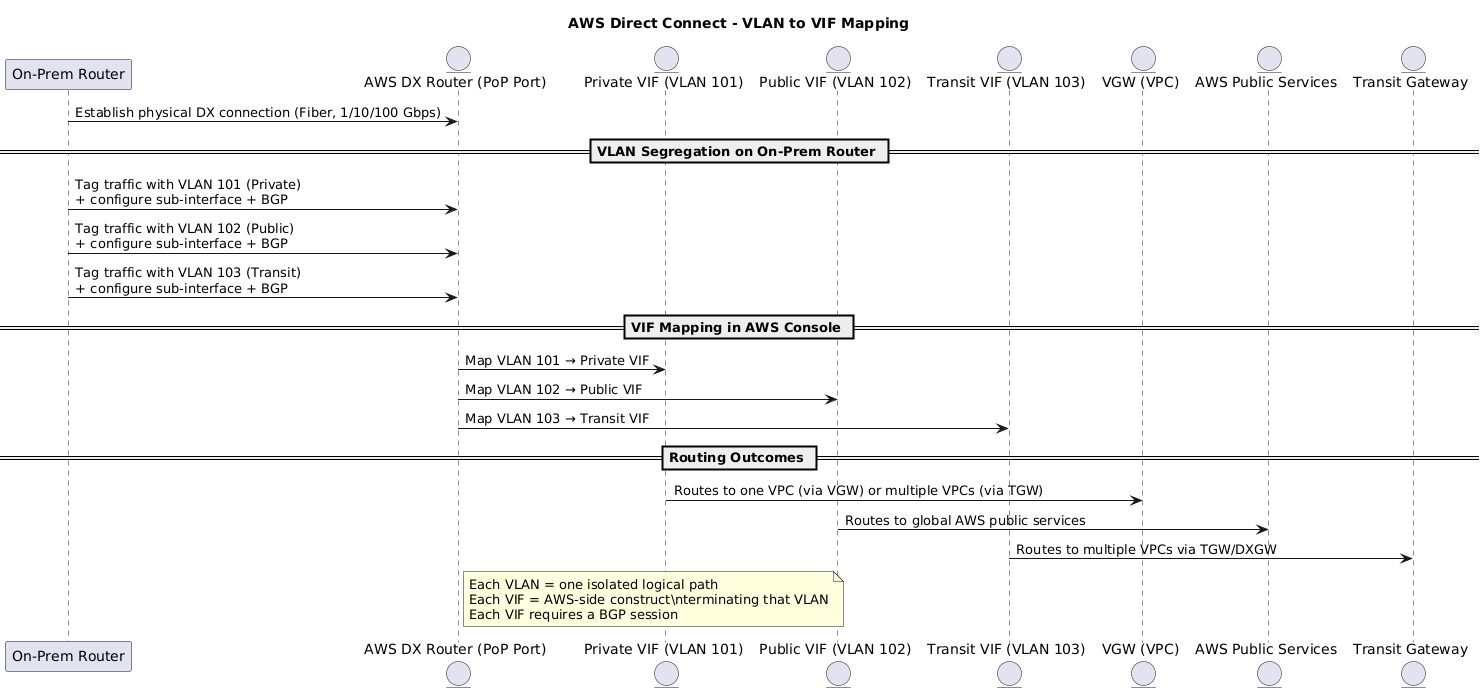

5. Direct Connect

AWS Direct Connect (DX) provides a dedicated, private network connection between your on-premises environment and AWS. Unlike VPN (which traverses the Internet), DX offers consistent latency, predictable bandwidth, and enterprise-grade reliability.

5.1 Direct Connect Physical Architecture

- Port Speeds: 1 / 10 / 100 Gbps

- 1 Gbps → `1000BASE-LX` (1310 nm)

- 10 Gbps → `10GBASE-LR` (1310 nm)

- 100 Gbps → `100GBASE-LR4`

- Medium: Single-mode fiber only (no copper).

- Configuration: Auto-negotiation disabled → both ends must manually set speed and full-duplex.

- Routing: Uses BGP with MD5 authentication.

- Optional:

- MACsec (802.1AE) for Layer 2 encryption.

- Bidirectional Forwarding Detection (BFD) for fast failure detection.

5.2 MACsec Security Layer

DX traffic is not encrypted by default. MACsec secures the physical hop between your router and AWS’s DX router at the PoP.

- Scope: Frame-level encryption (Layer 2).

- Guarantees: Confidentiality, integrity, origin authentication, replay protection.

- Performance: Hardware-accelerated, minimal overhead at 10/100 Gbps.

- Mechanism: Secure Channels, Secure Associations, SCI identifiers, 16B tag + 16B ICV.

- Limitations: Not end-to-end; only protects between directly connected devices. Use IPsec over DX if end-to-end encryption is required.
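As a rough illustration of the per-frame cost implied by the 16-byte tag and 16-byte ICV above, the sketch below estimates what fraction of the bytes on the wire is original frame data. It ignores preamble and inter-frame gap, and the function name is illustrative.

```python
SECTAG_BYTES = 16  # tag size as listed above (includes the SCI)
ICV_BYTES = 16     # integrity check value


def macsec_efficiency(frame_bytes):
    """Fraction of transmitted bytes that belong to the original frame."""
    overhead = SECTAG_BYTES + ICV_BYTES
    return frame_bytes / (frame_bytes + overhead)


# Large frames lose about 2%; minimum-size frames lose about a third.
large = macsec_efficiency(1500)  # 1500 / 1532
small = macsec_efficiency(64)    # 64 / 96
```

The fixed 32 bytes matter little for bulk transfers with large frames but become noticeable for workloads dominated by small packets.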

5.3 Direct Connect Provisioning Workflow

- LOA-CFA (Letter of Authorization – Connecting Facility Assignment)

- AWS allocates a port inside their cage at the DX location (PoP).

- You receive LOA-CFA to hand to your provider or colo staff.

- Physical Cross-Connect

- Fiber is patched between your cage/router and the AWS DX router at the PoP.

- Ports are set with matching speed/duplex.

5.4 Direct Connect Virtual Interfaces

DX is a Layer 2 link. To run multiple logical networks, DX uses 802.1Q VLANs, each mapping to a Virtual Interface (VIF) on the AWS side.

- Each VIF = one VLAN + one BGP session.

- On the customer side: configure sub-interfaces (per VLAN) with BGP.

Types of VIFs:

- Private VIF

- Connects to a VGW (1 VPC) or to a DX Gateway associated with VGWs (multiple VPCs); reaching a TGW requires a Transit VIF.

- Used for private VPC IP ranges.

- Region-specific (per DX location).

- 1 VIF = 1 VGW = 1 VPC (unless a DX Gateway is used).

- No built-in encryption.

- Public VIF

- Connects to AWS public endpoints (e.g., S3, DynamoDB, STS).

- AWS advertises all public IP prefixes; you advertise your public IPs.

- Global scope (all AWS regions).

- Not transitive: your prefixes are not re-shared by AWS.

- Can combine with VPN for DX + VPN (low latency + encryption).

- Transit VIF

- Connects via DX Gateway (DXGW) → TGW.

- Scales to multiple VPCs across accounts/regions.

- Requires BGP.

- Enterprise-scale hub-and-spoke hybrid connectivity.
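The rule that each VIF maps to one VLAN and one BGP session can be checked in a planning script before anything is provisioned. The sketch below is a hypothetical data model, not an AWS API; the `VirtualInterface` type, its fields, and `validate_vifs` are assumptions for illustration.

```python
from dataclasses import dataclass

VIF_TYPES = {"private", "public", "transit"}


@dataclass(frozen=True)
class VirtualInterface:
    name: str
    vif_type: str      # "private", "public", or "transit"
    vlan: int          # 802.1Q VLAN ID carried on the DX connection
    customer_asn: int  # ASN used for this VIF's BGP session


def validate_vifs(vifs):
    """Return a list of problems; an empty list means the plan is consistent."""
    problems = []
    seen = {}
    for vif in vifs:
        if vif.vif_type not in VIF_TYPES:
            problems.append(f"{vif.name}: unknown type {vif.vif_type}")
        if not 1 <= vif.vlan <= 4094:
            problems.append(f"{vif.name}: VLAN {vif.vlan} out of range")
        if vif.vlan in seen:
            problems.append(f"{vif.name}: VLAN {vif.vlan} already used by {seen[vif.vlan]}")
        else:
            seen[vif.vlan] = vif.name
    return problems
```

Two VIFs on distinct VLANs validate cleanly, while reusing a VLAN ID on the same connection is reported as a conflict.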

5.5 Direct Connect Gateway

- DX is per-region, but DXGW allows sharing across accounts and regions.

- Public VIFs: Can access all AWS regions (since public IPs are global).

- Private VIFs: Normally regional, but DXGW extends them to VGWs in multiple regions (TGWs are reached through Transit VIFs).

- Enables multi-account, multi-region hybrid architectures.

5.6 Operational Considerations

- Speed: Up to 100 Gbps, depending on DX location/provider.

- Encryption: Not built-in → use MACsec (L2 hop-by-hop) or VPN over DX (end-to-end).

- Resiliency: Use redundant DX links at different PoPs for HA.

- Cost: Port-hour charges + data transfer (cheaper than Internet egress).

- Provisioning: Weeks (physical cabling) vs minutes for VPN.

- Routing Priority:

- Longest prefix match always wins.

- Static > propagated.

- Typically, DX > VPN for the same prefix.

- Best Practice: DX + VPN (over DX Public VIF) = encrypted, resilient connectivity.

5.7 Connection Sequence

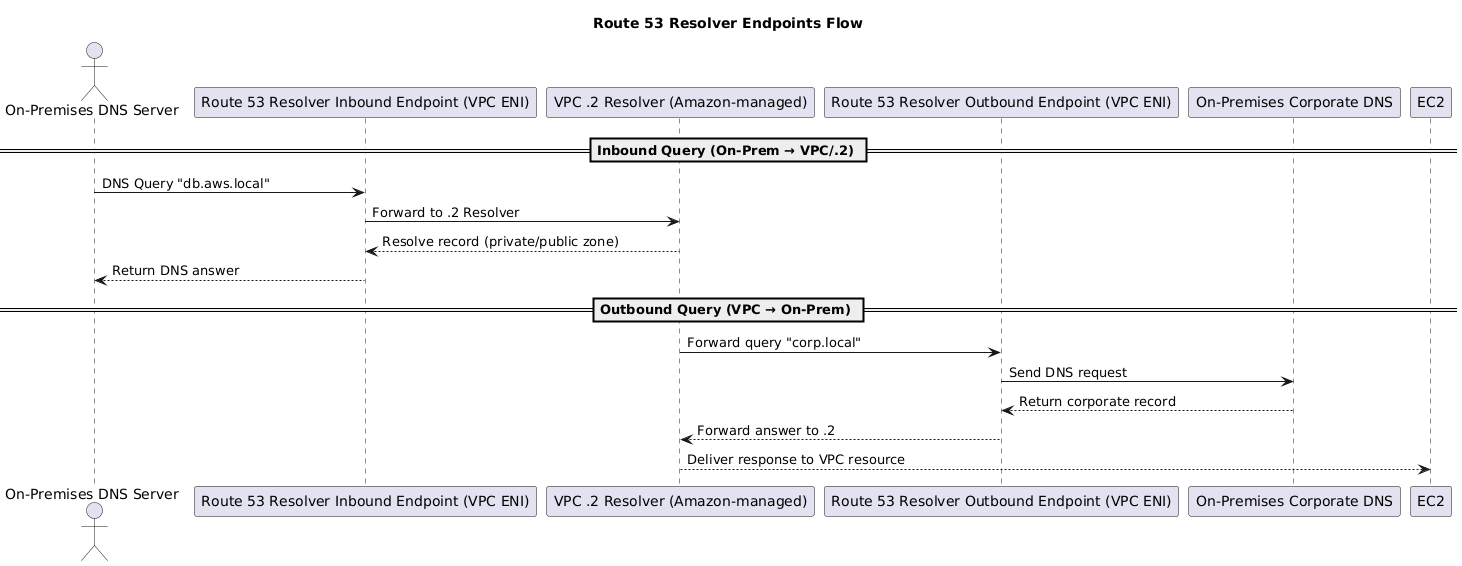

6. Domain Name System (DNS)

DNS (Domain Name System) resolves human-readable domain names (e.g., example.com) into IP addresses or service endpoints. Inside AWS, Route 53 is the managed DNS platform. For refresher material on registries, registrars, zone files, and baseline record types, see Domain Name System.

6.1 Route 53 Record Support

- Public & Private Hosted Zones: Route 53 can authoritatively serve internet-facing domains and split-horizon DNS tied to specific VPCs.

- Standard Record Types: Supports A, AAAA, CNAME, MX, TXT, NS, SOA, PTR, SRV, and CAA records, plus AWS-specific Alias records.

- Alias Records: Behave like CNAMEs but stay inside Route 53. They can point apex domains to AWS resources (ALB, NLB, CloudFront, S3 static sites, API Gateway, etc.) without extra DNS queries and incur no per-query charge.

- Health Checks & Failover: Records can reference Route 53 health checks to withdraw unhealthy endpoints, enabling DNS-level HA across Regions or on-prem targets.

6.2 Apex (Naked) Domains

- A naked domain = root (e.g., `example.com`) without subdomain; RFCs prohibit using a CNAME at the zone apex.

- Use Alias A/AAAA records to point the apex at AWS resources (ALB, NLB, CloudFront, API Gateway, S3 website endpoints) while keeping the record type compliant.

- For subdomains (`www.example.com`), continue to use CNAME or Alias records depending on whether the target is AWS-managed.

- Ensure the alias target lives in the same scope as the hosted zone: public zones can target global AWS endpoints, while private zones alias to in-VPC resources such as NLBs or interface endpoints.
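The apex rule above is easy to enforce in a pre-deployment check on planned records. The helper below is a minimal, hypothetical sketch that only flags a CNAME at the zone apex; it does not model Alias records or any other Route 53 validation.

```python
def apex_cname_violation(zone, name, record_type):
    """Return True when a CNAME is being placed at the zone apex."""
    zone = zone.rstrip(".").lower()
    name = name.rstrip(".").lower()
    return name == zone and record_type.upper() == "CNAME"
```

A CNAME for `example.com` in the `example.com` zone is flagged, while a CNAME for `www.example.com` or an A record at the apex is not.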

6.3 Route 53 Routing Policies

| Routing Policy | Purpose | Example |
|---|---|---|
| Simple | Single record → single resource | example.com → 192.0.2.1 |
| Weighted | Split traffic by % between resources | 80% → server1, 20% → server2 |
| Latency | Route to lowest-latency region | US users → us-east-1, EU users → eu-west-1 |
| Failover | Primary resource + backup on failure | Main site → backup site |
| Geolocation | Route by user’s country/region | US → 192.0.2.1, UK → 192.0.2.2 |
| Geoproximity | Route by distance + optional bias | East Coast users → NJ DC, West Coast → CA DC |
| Multi-Value | Return multiple IPs for LB/HA | example.com → 192.0.2.1, 192.0.2.2 |
| IP-based | Route by client IP blocks | Corp IP range → private endpoint |
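For the Weighted policy, the share of responses each record receives is its weight divided by the sum of all weights in the group. The sketch below works through that arithmetic; `weighted_shares` and the record names are illustrative.

```python
def weighted_shares(weights):
    """Map each record name to its expected share of DNS responses."""
    total = sum(weights.values())
    if total == 0:
        # Route 53 treats a group where every weight is 0 as equally weighted.
        return {name: 1 / len(weights) for name in weights}
    return {name: weight / total for name, weight in weights.items()}


shares = weighted_shares({"server1": 80, "server2": 20})
```

Weights do not need to add up to 100; `{"server1": 3, "server2": 1}` yields a 75% / 25% split in the same way.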

6.4 DNSSEC Overview

Route 53 supports DNSSEC signing for public hosted zones, letting resolvers verify that responses originated from AWS without tampering. Review the cryptographic principles in DNSSEC basics before enabling it in production.

6.4.1 Enable signing on Route 53 zones

- In the hosted zone, choose Enable DNSSEC signing. You create a key-signing key (KSK) backed by a customer managed asymmetric key in AWS KMS, and Route 53 handles zone-signing keys automatically.

- After signing completes, download the generated Delegation Signer (DS) record that contains the key tag, algorithm, digest type, and digest.

- Route 53 currently signs only public hosted zones; private hosted zones are not supported.

6.4.2 Publish DS at the registrar

- Provide the DS record to your domain registrar so it can publish the delegation in the parent zone. Without this step, resolvers cannot build the chain of trust.

- Confirm the registrar and registry support the chosen algorithm; Route 53 signs with ECDSAP256SHA256 (algorithm 13), and the DS record uses a SHA-256 digest (digest type 2).

- Plan for rollover: disabling or re-enabling DNSSEC requires updating or removing the DS record to avoid validation failures.
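Before pasting a DS record into a registrar form, it can help to split it into the four fields mentioned above and sanity-check the digest length. The parser below is a minimal sketch; the `DSRecord` type is hypothetical and the sample values in the usage note are made up, not taken from a real zone.

```python
from dataclasses import dataclass

# Digest lengths in bytes for common DS digest types (SHA-1, SHA-256, SHA-384).
DIGEST_LENGTHS = {1: 20, 2: 32, 4: 48}


@dataclass(frozen=True)
class DSRecord:
    key_tag: int
    algorithm: int
    digest_type: int
    digest: bytes


def parse_ds(rdata):
    """Parse DS presentation format: '<key tag> <algorithm> <digest type> <hex digest>'."""
    key_tag, algorithm, digest_type, *hex_parts = rdata.split()
    digest = bytes.fromhex("".join(hex_parts))
    record = DSRecord(int(key_tag), int(algorithm), int(digest_type), digest)
    expected = DIGEST_LENGTHS.get(record.digest_type)
    if expected is not None and len(digest) != expected:
        raise ValueError(f"digest is {len(digest)} bytes, expected {expected}")
    return record
```

For example, `parse_ds("12345 13 2 " + "ab" * 32)` returns a record with algorithm 13 and a 32-byte digest, while a truncated digest raises `ValueError`.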

6.4.3 Validation paths

- Many public recursive resolvers (Google Public DNS, Cloudflare, Quad9) already validate DNSSEC-signed zones. End users gain protection transparently once the DS record propagates.

- The Amazon-provided `.2` resolver inside a VPC does not validate DNSSEC by default. Enable DNSSEC validation for the VPC in Route 53 Resolver, or use Route 53 Resolver outbound endpoints to forward queries to a validating resolver (e.g., self-managed BIND/Unbound or a third-party service).

- For the full resolver handshake and signature-validation walkthrough, see DNSSEC Validation Flow.

6.5 Route 53 Resolver and Endpoints

By default, every VPC has an Amazon-managed DNS resolver at the reserved address two above the base of the VPC CIDR (e.g., 10.0.0.2 for 10.0.0.0/16).

- Accessible from all subnets in the VPC.

- Resolves public DNS records and private hosted zones linked to the VPC.

- No setup required — it’s built-in.

👉 Limitation: The default .2 resolver only works inside the VPC. It cannot be queried from on-premises networks or other VPCs directly.
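The resolver address can be derived from the VPC CIDR by adding two to the network base address. A minimal sketch using the standard library follows; the function name is illustrative.

```python
import ipaddress


def vpc_resolver_ip(vpc_cidr):
    """Return the Amazon-provided DNS resolver address for a VPC CIDR."""
    network = ipaddress.ip_network(vpc_cidr)
    return str(network.network_address + 2)
```

For `10.0.0.0/16` this yields `10.0.0.2`, matching the example above.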

To integrate DNS across hybrid or multi-VPC environments, AWS provides Route 53 Resolver Endpoints:

- Inbound Endpoint:

- On-premises DNS servers → query AWS `.2` resolver via an ENI in the VPC.

- Outbound Endpoint:

- VPC resources → forward queries to on-premises DNS servers.

These endpoints solve the DNS boundary problem where VPC and on-prem DNS could not previously resolve each other.

7. IPv6 in AWS

AWS VPCs support dual-stack networking (IPv4 + IPv6). Unlike private IPv4 ranges, the IPv6 addresses AWS assigns are globally unique and publicly routable, which removes the need for NAT but requires careful access control.

7.1 Key Points

- CIDR Allocation:

- Each VPC gets an Amazon-provided /56 IPv6 block.

- Each subnet is assigned a /64 range from that block (one per subnet, up to 256 subnets per /56).

- Routing:

- IPv6 routes appear separately in route tables alongside IPv4 routes.

- Works with the same routing constructs (IGW, TGW, VGW, etc.), but must be explicitly added.

- Gateways:

- Internet Gateway (IGW) → allows bidirectional IPv4 + IPv6.

- Egress-Only IGW → outbound-only for IPv6 (blocks unsolicited inbound).

- Service Support:

- Must be enabled per VPC, subnet, and ENI.

- Not all AWS services support IPv6 (check service docs before enabling).

- No NAT Needed:

- NAT Gateway is not required for IPv6 since all IPv6 addresses are globally routable. For IPv4, a NAT Gateway is needed because of address space limitations and the use of address masquerading (NAT) to share public IPs.

- Security relies on Security Groups / NACLs instead of NAT hiding.
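The 256-subnet figure follows from the prefix lengths: a /56 leaves 8 bits before the /64 boundary, and 2^8 = 256. The sketch below confirms this with the standard library, using a documentation prefix (`2001:db8::/32`) as a stand-in for an Amazon-provided block.

```python
import ipaddress


def ipv6_subnets(vpc_cidr):
    """List every /64 subnet that fits inside a VPC's IPv6 block."""
    return list(ipaddress.ip_network(vpc_cidr).subnets(new_prefix=64))


# Hypothetical /56 taken from the IPv6 documentation range.
subnets = ipv6_subnets("2001:db8:1234:1a00::/56")
```

The list runs from `2001:db8:1234:1a00::/64` through `2001:db8:1234:1aff::/64`.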

7.2 Considerations

- Plan dual-stack carefully: some workloads may remain IPv4-only.

- Use Egress-Only IGW to protect IPv6 workloads from unsolicited inbound traffic.

- Validate which AWS services support IPv6 before rollout (e.g., some managed services may lag behind).

- IPv6 helps with address exhaustion but introduces new security + monitoring challenges.

8. Exam Reminders

- DX virtual interfaces – Private (VGW or DX Gateway to VPC), Public (AWS public endpoints), Transit (DX Gateway to TGW). Use Link Aggregation Groups (LAGs) for redundancy/throughput; multiple private VIFs attach to a DX Gateway for multi-VPC reach.

- VPC DNS toggles – `enableDnsSupport` lets instances resolve names; `enableDnsHostnames` lets instances receive hostnames. Both must be on for Route 53 private zones and many managed services (ECS/Fargate).

- Endpoints – Interface (most services) vs Gateway (S3/DynamoDB, free). Pick based on traffic pattern.

- Internet plumbing – One IGW per VPC; deploy NAT Gateways per AZ for resilient outbound access; remember subnets are AZ-scoped.

- Routing hubs – Transit Gateway centralizes many-to-many VPC/VPN/DX; VGW is VPC-specific with site-to-site VPN.

- Route 53 quick sheet – `A` = IPv4, `AAAA` = IPv6, `CNAME` aliases to another name (not zone apex), Alias targets AWS resources (allowed at apex, free queries).

- Reserved subnet IPs – `.0` network, `.1` router, `.2` DNS, `.3` future use, `.255` broadcast → usable addresses = CIDR size − 5.

- Security boundaries – Network ACLs are stateless and operate on subnets; Security Groups are stateful on ENIs. For NLB targets, lock down security groups to NLB IP ranges/ports, not URL paths.
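The reserved-address reminder above translates directly into arithmetic: take the subnet size and subtract five. The helpers below are a small sketch with illustrative names; they list the first four addresses and the last address of the subnet as reserved.

```python
import ipaddress

AWS_RESERVED_PER_SUBNET = 5


def usable_addresses(subnet_cidr):
    """Number of addresses left for workloads in an IPv4 subnet."""
    return ipaddress.ip_network(subnet_cidr).num_addresses - AWS_RESERVED_PER_SUBNET


def reserved_addresses(subnet_cidr):
    """Network, router, DNS, future-use, and broadcast addresses of a subnet."""
    net = ipaddress.ip_network(subnet_cidr)
    first_four = [str(net.network_address + i) for i in range(4)]
    return first_four + [str(net.broadcast_address)]
```

A /24 therefore offers 251 usable addresses and a /28 offers 11.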